AI tools have advanced significantly in recent years, driven by breakthroughs in machine learning techniques and growing computing power. Together, these developments have propelled the field forward and enabled new applications in many areas. As AI tools continue to advance, further innovations are expected to drive progress across numerous domains, benefiting industry and society as a whole.

What are AI Tools?

AI tools, or artificial intelligence tools, are software applications or platforms that use artificial intelligence techniques to perform specific tasks or assist users in various ways. These tools rely on technologies such as machine learning, natural language processing, and computer vision, among others, to automate processes, analyze data, make predictions, and provide intelligent recommendations. Thanks to these capabilities, the use of AI has spread rapidly in recent years into areas such as:

- Natural Language Processing (NLP)

- Image and Video Recognition

- Predictive Analytics

- Autonomous Vehicles

- Healthcare Diagnosis and Treatment

- Fraud Detection

- Personalization and Customer Service

The Intelligent Design – How it Works

In short, an AI tool collects and analyzes input data to produce an output. Many tools can also “learn”, or be further trained, on the data they collect in order to give better answers to future queries. This is a critical point at which sensitive information can fall into the wrong hands: information submitted to an AI tool may be retained and used for further improvements, so it should no longer be treated as secure. It is therefore imperative not to enter sensitive or business-critical information into AI tools, because it may be used to train the model and later surface in unwanted places, such as in responses to other users’ queries.
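
One practical safeguard, sketched here under stated assumptions, is to screen a prompt for obviously sensitive patterns before it is sent to an AI tool. The `redact_sensitive` function and its three regular expressions (email addresses, card-like digit runs, IPv4 addresses) are hypothetical illustrations, not part of any particular product. Pattern matching will miss most business-critical information, such as strategy documents or source code, so it complements rather than replaces the rule of not sharing such data at all.

```python
import re

# Hypothetical, deliberately narrow patterns for illustration only; a real
# filter would need organization-specific rules (project names, customer IDs).
SENSITIVE_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13-16 digits, card-like
    "IPV4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
}

def redact_sensitive(prompt: str) -> tuple[str, list[str]]:
    """Replace each match with a placeholder and list the categories found."""
    found = []
    for label, pattern in SENSITIVE_PATTERNS.items():
        prompt, count = pattern.subn(f"[{label} REDACTED]", prompt)
        if count:
            found.append(label)
    return prompt, found

safe_prompt, found = redact_sensitive(
    "Summarize the ticket from alice@example.com about card "
    "4111 1111 1111 1111 on host 10.0.0.5"
)
```

A wrapper around an AI tool could refuse to send the prompt, or ask the user to confirm, whenever `found` is non-empty.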

Information Threats in AI Tools

AI tools are widely available and used by the general public, and a massive volume of data is continuously exchanged through them. Unknown to many users, this information is exposed to a range of threats, which can be grouped into the following categories:

Data Privacy:

AI tools often require access to large amounts of data for training and analysis. This data can contain sensitive and personal information, making it a prime target for hackers and other unauthorized parties. If the data is not properly protected, it can lead to privacy breaches and potential misuse of personal information.

Adversarial Attacks:

Adversarial attacks involve manipulating AI systems by intentionally feeding them misleading or malicious input data. This can result in AI systems making incorrect or harmful decisions, potentially leading to security breaches or system vulnerabilities. Adversarial attacks are a significant concern in areas such as image recognition, natural language processing, and autonomous vehicles.
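
The toy example below illustrates the idea behind gradient-sign attacks such as the fast gradient sign method (FGSM), using a hypothetical linear classifier with made-up weights. For a linear model, the gradient of the score with respect to the input is simply the weight vector, so shifting every feature by a small `epsilon` against the sign of its weight lowers the score as much as possible under a per-feature limit of `epsilon`, and here that is enough to flip the prediction. Real attacks target far larger models, but the principle is similar.

```python
# Hypothetical toy classifier: score = w . x + b, predict class 1 if score > 0.
WEIGHTS = [0.9, -0.4, 0.6]
BIAS = -0.5

def score(x):
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS

def predict(x):
    return 1 if score(x) > 0 else 0

def sign(v):
    return (v > 0) - (v < 0)

def adversarial_example(x, epsilon):
    """Move each feature by epsilon in the direction that lowers the score.
    For a linear model, d(score)/dx is just WEIGHTS."""
    return [xi - epsilon * sign(w) for w, xi in zip(WEIGHTS, x)]

original = [1.0, 0.5, 0.5]                               # score 0.5 -> class 1
perturbed = adversarial_example(original, epsilon=0.3)   # score -0.07 -> class 0
```

Each feature changes by at most 0.3, yet the decision flips, which is why small, hard-to-notice input changes can be dangerous.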

Model Poisoning:

AI models are trained using datasets, and if an attacker can inject malicious or manipulated data during the training process, it can compromise the integrity and security of the AI system. This can lead to biased or inaccurate predictions, unauthorized access, or other malicious activities.
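
To make this concrete, the sketch below trains a deliberately simple nearest-centroid classifier on hypothetical one-dimensional risk scores and shows how injecting a handful of mislabelled training samples shifts the learned “benign” centroid enough to change the verdict on a borderline input. The data, labels, and function names are invented for illustration; this is data poisoning in miniature, not a description of any specific real-world attack.

```python
from statistics import mean

def train_centroids(samples):
    """Learn one centroid (mean feature value) per label from (value, label) pairs."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}

def classify(centroids, value):
    """Assign the label whose centroid is closest to the input value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))

# Hypothetical training data: low scores are benign, high scores are malicious.
clean = [(1.0, "benign"), (1.2, "benign"), (0.8, "benign"),
         (5.0, "malicious"), (5.5, "malicious"), (4.5, "malicious")]

# The attacker injects high-scoring samples deliberately labelled "benign".
poison = [(6.0, "benign")] * 6

probe = 4.0
clean_verdict = classify(train_centroids(clean), probe)             # "malicious"
poisoned_verdict = classify(train_centroids(clean + poison), probe)  # "benign"
```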

Unauthorized Access and Control:

AI tools often have complex architectures and interfaces that can be susceptible to unauthorized access or control. If an attacker gains unauthorized access, they can manipulate the AI system’s behavior, steal sensitive information, or disrupt its functioning.
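
A basic defense against unauthorized access is to authenticate every request before it reaches the model. The sketch below assumes a hypothetical service that stores only SHA-256 digests of issued API keys and rejects any request whose key does not match; the key value, `is_authorized`, and `handle_request` are illustrative names, not any real product’s API. It uses `hmac.compare_digest` from Python’s standard library, which is designed to resist timing analysis when comparing secrets.

```python
import hashlib
import hmac

# Hypothetical key store: only SHA-256 digests of issued API keys are kept,
# so a leaked store does not directly reveal usable keys.
AUTHORIZED_KEY_DIGESTS = {
    hashlib.sha256(b"example-key-for-illustration-only").hexdigest(),
}

def is_authorized(presented_key: str) -> bool:
    """True only if the presented key's digest matches a stored digest."""
    digest = hashlib.sha256(presented_key.encode("utf-8")).hexdigest()
    # compare_digest avoids leaking match length through timing differences.
    return any(hmac.compare_digest(digest, stored) for stored in AUTHORIZED_KEY_DIGESTS)

def handle_request(presented_key: str, prompt: str) -> str:
    """Hypothetical handler: reject before the prompt ever reaches the model."""
    if not is_authorized(presented_key):
        return "403 Forbidden"
    return f"accepted: {prompt}"
```

In practice this would be combined with measures such as key rotation, rate limiting, and least-privilege permissions.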

Data Bias and Discrimination:

AI tools learn from historical data, and if the training data is biased or reflects discriminatory patterns, the AI models can perpetuate those biases or discrimination in their predictions. This can lead to unfair or discriminatory outcomes, posing ethical and legal risks.
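
One simple check for this kind of bias is to compare how often a model produces a favorable outcome for different groups. The sketch below computes per-group approval rates and the ratio of the lowest to the highest rate on invented data; the 0.8 threshold reflects the widely cited “four-fifths” rule of thumb from US employment-selection guidance, and the group names and decisions are hypothetical. A low ratio is a signal for closer review rather than proof of discrimination, and this metric captures only one notion of fairness.

```python
def selection_rates(decisions):
    """Approval rate per group from (group, approved) pairs."""
    totals, approvals = {}, {}
    for group, approved in decisions:
        totals[group] = totals.get(group, 0) + 1
        approvals[group] = approvals.get(group, 0) + int(approved)
    return {group: approvals[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0

# Hypothetical model decisions for two groups of 100 applicants each.
decisions = ([("group_a", True)] * 60 + [("group_a", False)] * 40
             + [("group_b", True)] * 30 + [("group_b", False)] * 70)

rates = selection_rates(decisions)      # {"group_a": 0.6, "group_b": 0.3}
ratio = disparate_impact_ratio(rates)   # 0.5
needs_review = ratio < 0.8              # four-fifths rule of thumb -> True
```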

Supply Chain and Third-Party Risks:

AI tools often rely on various components, libraries, or external services provided by third parties. If these dependencies are not properly vetted or secured, they can introduce vulnerabilities or backdoors into the AI system, which can be exploited by attackers.
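
For third-party components such as pre-trained models or packages, one basic safeguard is to verify a downloaded file against a checksum published by its supplier before loading it. The sketch below uses Python’s standard `hashlib`; the file name, its contents, and the “published” checksum are hypothetical stand-ins created on the fly. A checksum only helps if it is obtained from a trusted channel separate from the download itself. Package managers offer similar mechanisms, for example pip’s hash-checking mode.

```python
import hashlib
import os
import tempfile

def sha256_of_file(path: str, chunk_size: int = 65536) -> str:
    """Hash a file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_artifact(path: str, expected_sha256: str) -> None:
    """Raise ValueError unless the file matches the supplier's published checksum."""
    actual = sha256_of_file(path)
    if actual != expected_sha256.strip().lower():
        raise ValueError(f"checksum mismatch for {path}: got {actual}")

# Demo: a temporary file stands in for a downloaded third-party model.
with tempfile.TemporaryDirectory() as workdir:
    artifact = os.path.join(workdir, "model.bin")
    with open(artifact, "wb") as f:
        f.write(b"model weights v1")
    published = hashlib.sha256(b"model weights v1").hexdigest()  # hypothetical vendor checksum
    verify_artifact(artifact, published)  # matches, so no exception is raised

    with open(artifact, "ab") as f:
        f.write(b" + injected backdoor")  # simulate tampering
    try:
        verify_artifact(artifact, published)
        tampering_detected = False
    except ValueError:
        tampering_detected = True
```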

Shielding from Threats – The Importance of Raising User Awareness

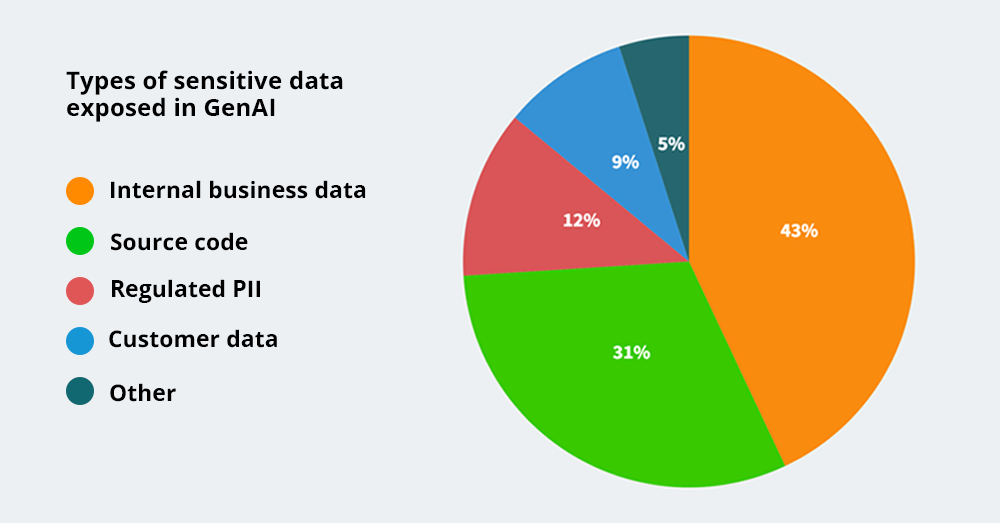

Research [1] offers insights into how information is exposed to generative AI (GenAI) tools such as ChatGPT. It found a 44% increase in GenAI usage over the previous three months alone. Despite this growth, on average only 19% of an organization’s employees currently use GenAI tools. The graph below shows how users have exposed information to AI tools.

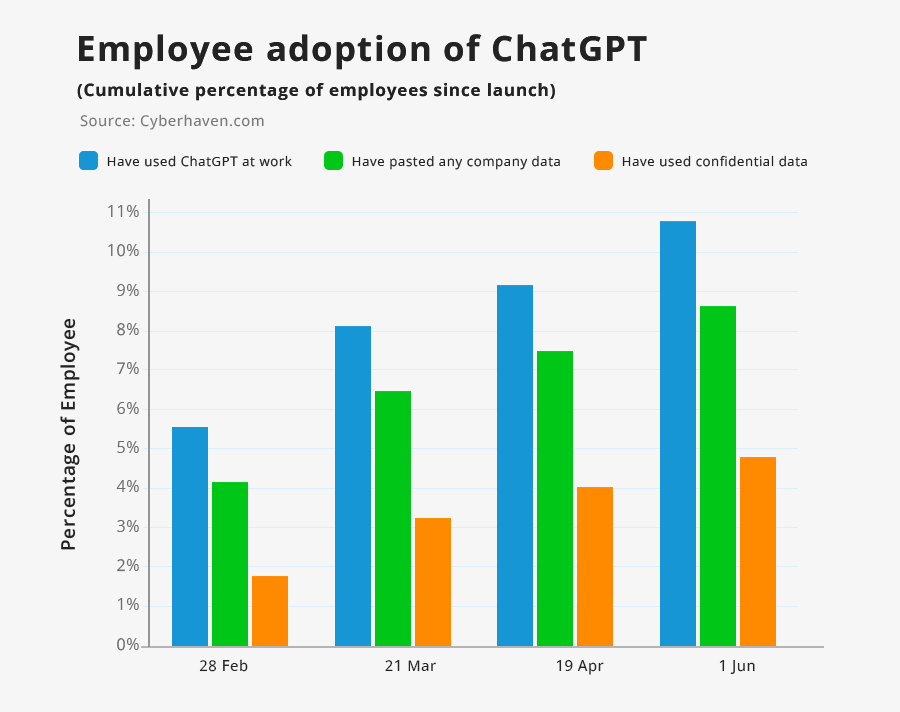

Another study [2] examines how users have adopted AI tools such as ChatGPT and how they have exposed information to these tools.

The graph above indicates how users have relied on AI tools to get their work done in recent times.

The graph below shows how they have inadvertently exposed different types of information to AI tools.

Together, these findings [1][2] illustrate the information security threats that can arise through the use of AI tools.

User awareness and education are therefore key to controlling these information security threats. By promoting awareness of security best practices, organizations can help individuals become empowered stakeholders in the AI ecosystem and encourage responsible, ethical, and beneficial outcomes for individuals and society. Security education should give clear instructions on the safe use of AI tools, explain the potential security risks, and encourage users to report any security concerns or vulnerabilities they discover. eBuilder Security provides managed information security awareness training through its Complorer training platform. Read more about Complorer at www.complorer.com.

References

- [1] https://thehackernews.com/2023/06/new-research-6-of-employees-paste.html

- [2] https://www.cyberhaven.com/blog/4-2-of-workers-have-pasted-company-data-into-chatgpt/